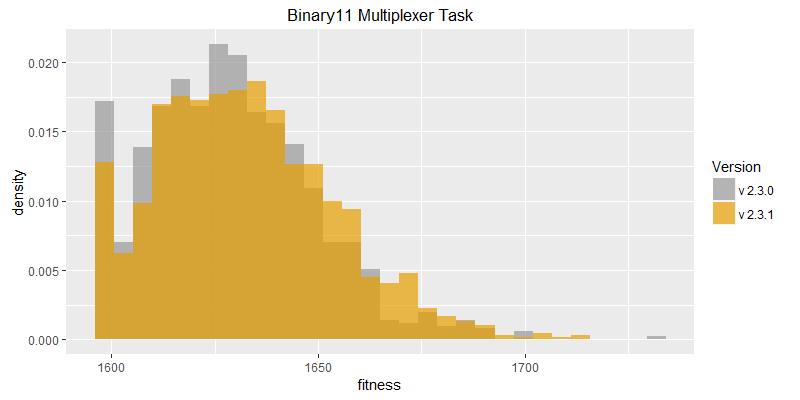

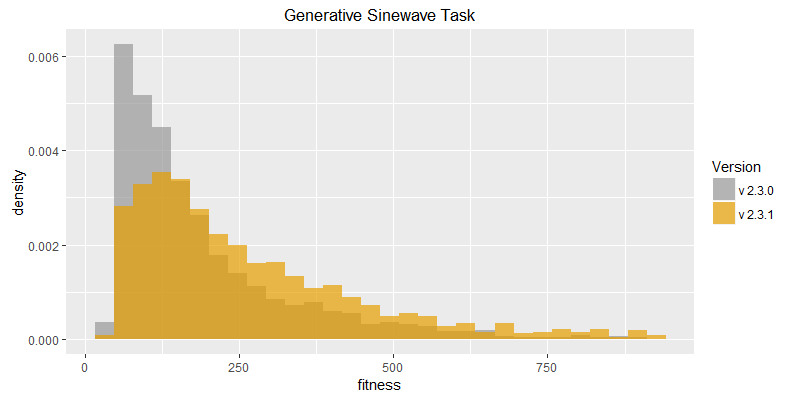

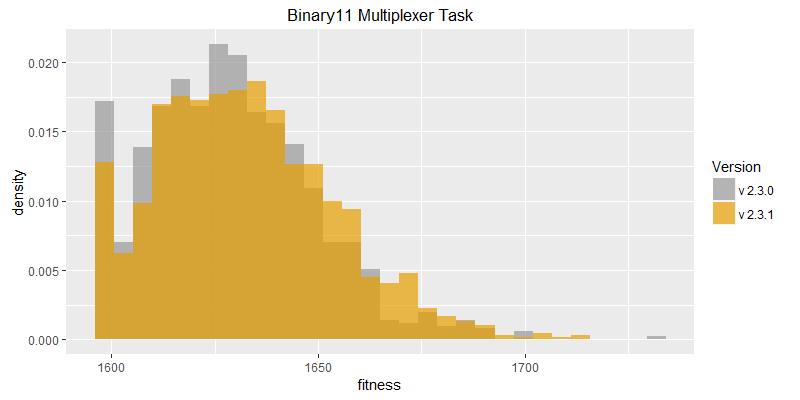

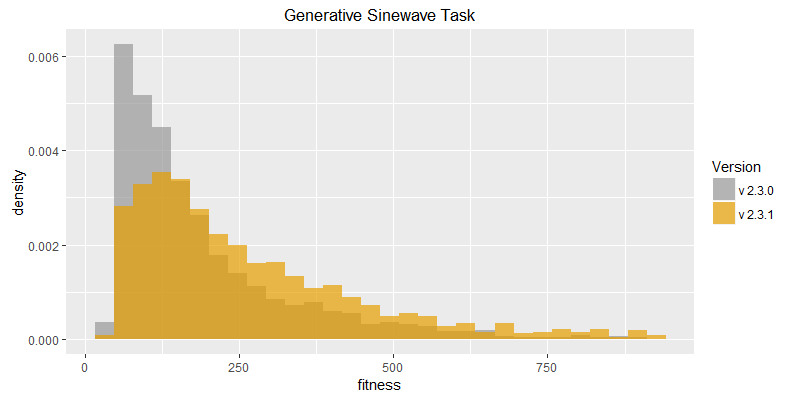

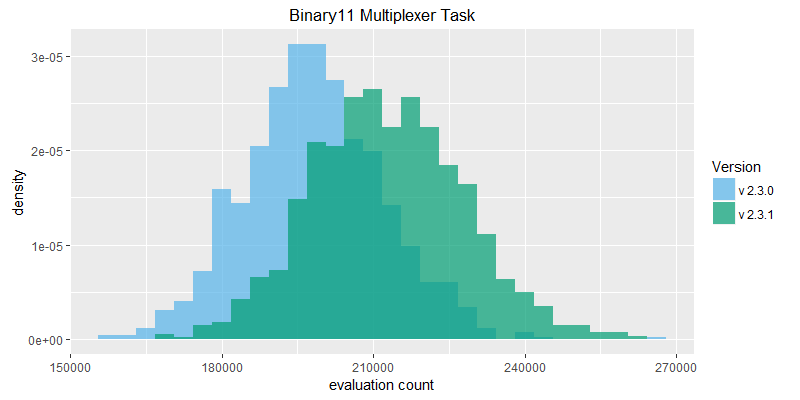

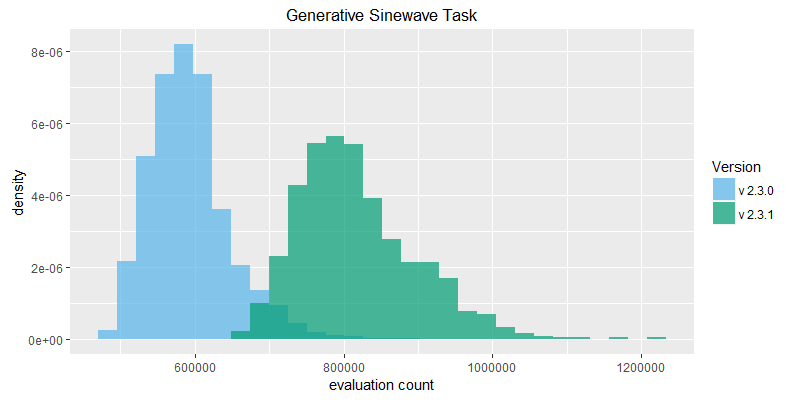

Comparison of best fitness histograms obtained from SharpNEAT v2.3.0 and v2.3.1 on the Binary11 Multiplexer and Generative Sinewave tasks.

This version contains no major functional changes. It is primarily a marker release for recording and reporting efficacy sampler results after a small change to the efficacy sampler program's runtime environment. That change produced a substantial performance improvement. As a result, the efficacy sampling experiments on the two benchmark tasks had to be re-run to obtain baseline results against which future improvements can be compared.

The change in question is a setting, added to the standard .NET application config file, that enables the 'server' garbage collection (GC) algorithm. Without this setting the default 'client' GC algorithm (called the workstation GC in the .NET documentation) is used. It appears to be wholly unsuitable for the intensive multi-threaded CPU workload that SharpNEAT generates.

In particular the client mode GC will sporadically crash with the following error:

```
System.ExecutionEngineException occurred HResult=0x80131506
```

This crash sometimes occurs within a few minutes of running efic.exe (the efficacy sampler program that runs SharpNEAT in a continuous loop); on other occasions it has occurred several days into execution. Using the server mode GC so far appears to have resolved this problem, and has also resulted in a significant increase in overall execution speed. The relevant config setting is:

```xml
<runtime>
  <gcServer enabled="true"/>
</runtime>
```

Note that the main SharpNEAT GUI program already contains this setting. It was omitted in error when creating the efficacy sampler program.
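The `<runtime>` fragment above belongs inside the root `<configuration>` element of the program's `App.config` file. The following is a minimal sketch of a complete file. It assumes a project targeting .NET Framework 4.6.1, the framework version installed on the test machine. The `<startup>` section is an assumption about the project's target framework and is not copied from the efficacy sampler's actual config file.

```xml
<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <startup>
    <!-- Assumed target framework; adjust to match the actual project. -->
    <supportedRuntime version="v4.0" sku=".NETFramework,Version=v4.6.1"/>
  </startup>
  <runtime>
    <!-- Select the server GC instead of the default workstation ('client') GC. -->
    <gcServer enabled="true"/>
  </runtime>
</configuration>
```

At build time Visual Studio copies `App.config` to `<program>.exe.config` next to the executable (for example `efic.exe.config`). The runtime reads the `gcServer` setting from that file at startup. One way to confirm which GC is active is to read the `System.Runtime.GCSettings.IsServerGC` property from within the running program.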

Essentially, the client mode GC appears to contain a defect, but one that only manifests when the GC is used in an environment it is not well suited to. That same unsuitability also accounts for the performance loss. However, the issue can be sidestepped by using the GC algorithm that is better suited to SharpNEAT's CPU workload.

It is my understanding that the client GC attempts to maximize the responsiveness of interactive programs. As such, it will attempt to perform some GC activity concurrently with the running program threads. The server GC, by contrast, uses a more traditional approach of freezing all program threads before performing GC activity. The server GC could therefore cause pauses and stutter in a UI-based application. However, it is better suited to CPU-intensive workloads because it gives better overall performance, with less thread context switching and less blocking on thread synchronisation locks.

These two new classes ensure that the neural net output values always lie in the interval [0,1], even if the activation function's output range extends beyond that interval. This in turn removes the requirement for activation functions to produce outputs in the interval [0,1], which allows for performance optimisations in their implementations.

Efficacy sampling was performed on the two standard benchmark tasks, and the results were compared between this version and the previous one (version 2.3.0). The resulting best fitness histograms are shown in the accompanying figures. To recap, these histograms show the best fitness achieved in each of a large number of independent SharpNEAT runs, with each run terminating after one minute of execution. Histograms comparing the number of evaluations completed in each 60-second run are also provided.

The evaluation count histograms demonstrate the substantial performance improvement obtained by enabling the server mode garbage collection algorithm. The Generative Sinewave task obtained the greatest performance boost. This is likely because it spends proportionally more time in the NEAT algorithm itself (reproduction, mutation, crossover, speciation, etc.), which involves more memory allocation and hence more garbage collection. The Binary11 task, by contrast, spends proportionally more time in the neural network activation code. That code operates on a static neural net model with minimal need for memory allocation and thus garbage collection. Binary11 is therefore more responsive to performance improvements in the neural network code than to the choice of GC algorithm.

It is hoped that the efficacy sampling evidence provided will form a good baseline for future releases to be compared against.

- OS name: Microsoft Windows 10 Home SP0.0
- OS architecture: 64-bit
- .NET Framework: 4.6.1 (CLR 4.0.30319.42000)
- CPU brand: GenuineIntel
- CPU name: Intel Core i7-6700T CPU @ 2.80GHz
- CPU architecture: x64
- Cores: 4
- Frequency: 2808 MHz
- RAM: 16 GB

Colin,

May 18th, 2017

Copyright 2017 Colin Green.